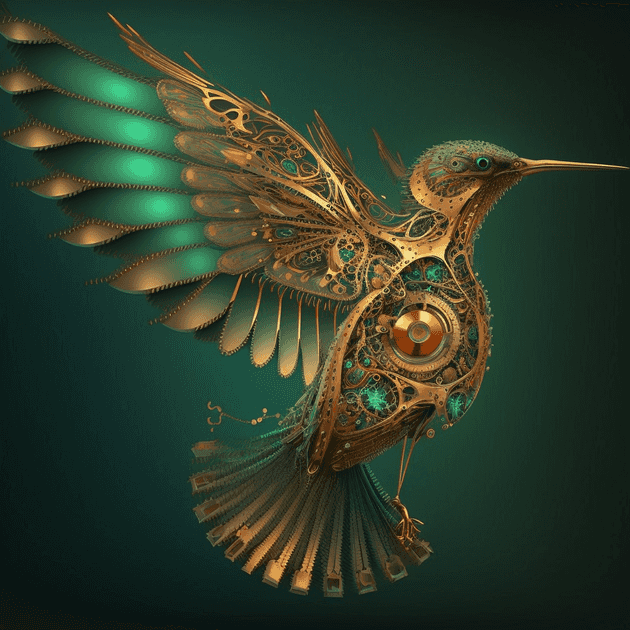

I am a consistent though not especially intensive user of Midjourney, a system for generating images from combinations of text and image prompts. I’ve generated around 800 images over the last few months, a pittance compared to Midjourney’s most prolific users. I’d guess I’m in something like the 30th percentile among users who have generated at least 10 images.

Today, you use Midjourney by writing your prompt as a command to a Discord bot. This is the only generally available way to use it, though there are rumors about a new web interface under development. The current interface leaves many obvious things to be desired, but I don’t think they’re interesting to enumerate. Instead, despite my relatively limited experience, I want to use this post to discuss a few high-level features I’d like to see in future image generation interfaces.

History and branching

The fundamental interaction loop of all image generation systems I have used is to write out a prompt, see what is generated, then tweak the prompt and iterate.

In Midjourney today, tweaking the prompt means copying and pasting it from a previous message in the chat history. Other interfaces, like AUTOMATIC1111’s Stable Diffusion Web UI, have a single text box where you can edit the prompt between generations without any copying and pasting, but from what I can tell, there’s no way to see past iterations of the prompt.

Because this iteration loop is so central, history should be a first-class citizen. Tweaking a prompt shouldn’t be destructive. You should be able to scroll through past iterations of the prompt and see any images generated with each. Midjourney gets this right, with the conversation history forming a log of prompts and generated images, but it doesn’t track the ancestry of each prompt, so all prompts and images from possibly different threads of iteration get merged together into a single unstructured log.

Not all steps in the iteration are equally meaningful. I find that I occasionally hit on a prompt that clicks, where the model starts reliably giving me the kinds of images I want. When I hit on one of these, I’ll often generate many images from it and do lots of upscales, and when I want to try out a new direction, I’m usually forking from one of these anchor prompts. I imagine being able to mark such prompts as anchors in the interface. In any visualization of the history tree, these would be the major nodes, perhaps with a way to also see the fine-grained iterations between anchors.
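To make non-destructive, branching history a bit more concrete, here is a minimal Python sketch of a prompt history tree. It does not reflect how Midjourney or any other tool actually stores history; `PromptNode`, `fork`, `lineage`, and `anchor_lineage` are hypothetical names. Each tweak forks a new child instead of overwriting the prompt, and an anchor is just a flag that a visualization could use to collapse the fine-grained steps between anchors.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)  # nodes compare by identity: identical prompts are still separate iterations
class PromptNode:
    """One iteration of a prompt in a hypothetical branching history."""

    prompt: str
    parent: Optional[PromptNode] = field(default=None, repr=False)
    images: list[str] = field(default_factory=list)  # e.g. paths or URLs of generated images
    anchor: bool = False  # user-marked "this prompt clicks"
    children: list[PromptNode] = field(default_factory=list, repr=False)

    def fork(self, new_prompt: str) -> PromptNode:
        """Start a new iteration from this one without modifying it."""
        child = PromptNode(new_prompt, parent=self)
        self.children.append(child)
        return child

    def lineage(self) -> list[PromptNode]:
        """Path from the root prompt down to this node."""
        path: list[PromptNode] = []
        node: Optional[PromptNode] = self
        while node is not None:
            path.append(node)
            node = node.parent
        return path[::-1]

    def anchor_lineage(self) -> list[PromptNode]:
        """Only the anchors along this node's lineage: the major nodes of the tree."""
        return [n for n in self.lineage() if n.anchor]


root = PromptNode("fruit god, marble sculpture")
good = root.fork("fruit god, marble sculpture, dramatic lighting")
good.anchor = True
branch = good.fork("fruit goddess, marble sculpture, dramatic lighting")
print([n.prompt for n in branch.anchor_lineage()])
```

Because forking never edits the parent, the root prompt stays intact and any number of branches can grow from the same anchor.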

Chunk library

A huge part of the process of using text-to-image models is discovering chunks of prompt text that reliably elicit a particular subject, style, or other visual feature from the model. These chunks are often composable with other chunks and reusable across projects. Naturally, then, it should be possible to add chunks to a library that is easily accessible while iterating on a prompt.

As a chunk starts to get used across different projects, there will be multiple, diverse examples of the kinds of images that are generated when that chunk is used. These previously generated examples would serve as an ideal at-a-glance preview of what the chunk might do for your image. One could imagine mechanisms for choosing a small but diverse set of images generated using the chunk, making sure the preview is as informative as possible.
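One simple candidate for picking a diverse preview is greedy farthest-point selection. The sketch below assumes each generated image already has a numeric feature vector (for example, from some image embedding model, which is not shown and is purely an assumption here); `diverse_preview` and the toy vectors are hypothetical. It starts from one image and repeatedly adds whichever image is farthest from everything already chosen, so near-duplicates tend to be skipped.

```python
from __future__ import annotations


def squared_distance(a: list[float], b: list[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def diverse_preview(embeddings: dict[str, list[float]], k: int) -> list[str]:
    """Greedy farthest-point selection of up to k image IDs.

    Starts from the first image in insertion order, then repeatedly adds the image
    whose nearest already-chosen image is farthest away.
    """
    ids = list(embeddings)
    if k <= 0 or not ids:
        return []
    first = ids[0]
    chosen = [first]
    # Squared distance from every image to its nearest chosen image so far.
    nearest = {i: squared_distance(embeddings[i], embeddings[first]) for i in ids}
    while len(chosen) < min(k, len(ids)):
        best = max((i for i in ids if i not in chosen), key=lambda i: nearest[i])
        chosen.append(best)
        for i in ids:
            nearest[i] = min(nearest[i], squared_distance(embeddings[i], embeddings[best]))
    return chosen


# Toy 2-D vectors standing in for real image embeddings.
images = {
    "a": [0.0, 0.0],
    "b": [0.1, 0.0],  # near-duplicate of "a"
    "c": [5.0, 5.0],
    "d": [0.0, 5.0],
}
print(diverse_preview(images, 3))  # ['a', 'c', 'd']
```

With these toy vectors, `"b"` sits almost on top of `"a"`, so it is left out of the three-image preview.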

There is a vast space of possible chunks, and it’s easy to imagine a personal library of hundreds or thousands of them. That calls for some means of organization. As mentioned above, different chunks play different roles in the prompt, so separating subject-oriented chunks from style-oriented ones, for instance, would be a natural first step. Beyond that, one could imagine richer organizational schemes, like nested folders or even many-to-many tags.
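A minimal sketch of this kind of organization, assuming each chunk has a single role plus any number of free-form tags. `Chunk`, `ChunkLibrary`, and the role and tag names are hypothetical; a real library would also need persistence and a way to browse it while editing a prompt.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass, field
from typing import Iterable, Optional


@dataclass
class Chunk:
    text: str
    role: str  # hypothetical roles such as "subject" or "style"
    tags: set[str] = field(default_factory=set)


class ChunkLibrary:
    """Hypothetical personal chunk library: one role per chunk, many-to-many tags."""

    def __init__(self) -> None:
        self.chunks: dict[str, Chunk] = {}
        self._names_by_tag: defaultdict[str, set[str]] = defaultdict(set)

    def add(self, name: str, chunk: Chunk) -> None:
        self.remove(name)  # replacing a chunk must not leave stale tag entries behind
        self.chunks[name] = chunk
        for tag in chunk.tags:
            self._names_by_tag[tag].add(name)

    def remove(self, name: str) -> None:
        old = self.chunks.pop(name, None)
        if old is not None:
            for tag in old.tags:
                self._names_by_tag[tag].discard(name)

    def find(self, role: Optional[str] = None, tags: Iterable[str] = ()) -> list[str]:
        """Names of chunks with the given role (if any) that carry every given tag."""
        names = set(self.chunks)
        for tag in tags:
            names &= self._names_by_tag.get(tag, set())
        if role is not None:
            names = {n for n in names if self.chunks[n].role == role}
        return sorted(names)


lib = ChunkLibrary()
lib.add("marble", Chunk("carved from white marble, museum lighting", "style", {"sculpture", "classical"}))
lib.add("fruit_god", Chunk("a regal deity made of fruit", "subject", {"fantasy", "classical"}))
lib.add("photoshoot", Chunk("concept photoshoot, studio lighting", "style", {"photo"}))
print(lib.find(role="style"))        # ['marble', 'photoshoot']
print(lib.find(tags=["classical"]))  # ['fruit_god', 'marble']
```

Keeping the role as a single field and tags as a set mirrors the split above: roles give the coarse first cut, while tags allow one chunk to live in many groupings at once.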

And why stop at only browsing through your own chunks? Users could build up a collective chunk library that individual users could pull into their personal libraries. Perhaps users could even be rewarded, whether in clout or currency, for contributing widely used chunks.

As a potential extension, maybe chunks could contain other chunks? The rich compositional structures this would enable in prompts may be lost on today’s models, which often struggle with prompts like “girl with glasses holding hands with boy wearing a green sweater”, for instance putting the sweater on the girl and the glasses on the boy. I don’t expect this limitation to be permanent, though.
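If chunks could contain other chunks, expanding a prompt becomes a small recursive substitution. This sketch assumes a made-up `{name}` syntax for referencing another chunk and stores the library as a plain dict; `expand` is a hypothetical helper. It refuses cyclic definitions rather than looping forever, and a missing chunk name simply raises `KeyError`.

```python
from __future__ import annotations

import re

# Hypothetical syntax: "{name}" inside a chunk refers to another chunk by name.
REFERENCE = re.compile(r"\{(\w+)\}")


def expand(name: str, library: dict[str, str], stack: tuple[str, ...] = ()) -> str:
    """Recursively inline referenced chunks; `stack` tracks the chunks being expanded."""
    if name in stack:
        raise ValueError("cyclic chunk reference: " + " -> ".join(stack + (name,)))
    text = library[name]  # KeyError if the chunk does not exist
    return REFERENCE.sub(lambda m: expand(m.group(1), library, stack + (name,)), text)


library = {
    "girl": "girl with glasses",
    "boy": "boy wearing a green sweater",
    "couple": "{girl} holding hands with {boy}",
    "scene": "{couple}, watercolor",
}
print(expand("scene", library))
# girl with glasses holding hands with boy wearing a green sweater, watercolor
```

Only the current expansion path is tracked, so reusing the same chunk twice in one prompt is allowed; only a chunk that (directly or indirectly) contains itself is rejected.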

Generating collections

Because it takes so much less effort to generate an image than it does to paint a painting or even snap a photo, I often find myself wanting to create collections rather than individual images. I might start out with an idea for a single image, but while iterating on it, notice that there is something interesting about the juxtaposition of multiple images. A single character might become a cast, a single artifact a civilization, or a single landscape a world.

When the goal is to build a complete collection, there is often substantial overlap in the prompts for each element of the collection. If I’m making a collection of characters, say a pantheon of fruit, there are generally character-specific parts of the prompt (the individual fruit gods and goddesses and their features) and other parts that are shared across the whole collection (like whether I want the style of a concept photoshoot, marble sculptures, or paintings). I might switch back and forth between iterating on individual characters and iterating on the style of the entire collection.

Today, iterating on the collection-level parts of the prompt means regenerating all of the characters. In practice, since that takes a long time, I iterate on the collection-level parts while seeing their effects on only one character, then regenerate all the characters only once I have made substantial progress.

I could imagine a system where I can insert variables into the prompt and specify a set of values each variable might take on. For example, the variable $fruit_divinity might take on values like “High Lord of Oranges” or “Princess of Passionfruit”.
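Python’s standard `string.Template` happens to use the same `$name` syntax, so a minimal sketch of variable expansion is short. `generate_collection` is a hypothetical helper, not part of any image generation tool: it produces one prompt per combination of variable values, so editing the shared, collection-level text of the template changes every prompt in the collection at once.

```python
from __future__ import annotations

from itertools import product
from string import Template


def generate_collection(prompt: str, variables: dict[str, list[str]]) -> list[str]:
    """One prompt per combination of variable values (the Cartesian product)."""
    template = Template(prompt)
    names = list(variables)
    return [
        template.substitute(dict(zip(names, combo)))
        for combo in product(*(variables[n] for n in names))
    ]


prompts = generate_collection(
    "$fruit_divinity, $medium",
    {
        "fruit_divinity": ["High Lord of Oranges", "Princess of Passionfruit"],
        "medium": ["marble sculpture", "oil painting"],
    },
)
for p in prompts:
    print(p)
# High Lord of Oranges, marble sculpture
# High Lord of Oranges, oil painting
# Princess of Passionfruit, marble sculpture
# Princess of Passionfruit, oil painting
```

`Template.substitute` raises `KeyError` if the prompt mentions a variable with no listed values, which is probably the right behavior for catching typos in variable names.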

Extending from here, collections don’t necessarily need to be static or fully enumerated. Variables could be anything: the current time of day, a randomly selected country, a description of the day’s weather, the first proper noun found on the front page of the New York Times, or any other computable string.
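Dynamic variables could be modeled as functions that are called at generation time instead of fixed lists of values. In this sketch, `time_of_day`, `render`, and the small `COUNTRIES` sample are hypothetical; fetching the weather or reading a newspaper’s front page would be additional resolver functions, which are left out here rather than guessed at.

```python
from __future__ import annotations

import random
from datetime import datetime
from string import Template
from typing import Callable, Optional

# Hypothetical: each variable maps to a zero-argument function called at generation time.
Resolver = Callable[[], str]

COUNTRIES = ["Japan", "Peru", "Norway", "Kenya"]  # illustrative sample, not a full list


def time_of_day(now: Optional[datetime] = None) -> str:
    """Map the current (or given) hour to a coarse description."""
    hour = (now if now is not None else datetime.now()).hour
    if 5 <= hour < 12:
        return "morning"
    if 12 <= hour < 17:
        return "afternoon"
    if 17 <= hour < 21:
        return "evening"
    return "night"


def render(prompt: str, resolvers: dict[str, Resolver]) -> str:
    """Call each resolver once and substitute its result for the matching $variable."""
    values = {name: resolve() for name, resolve in resolvers.items()}
    return Template(prompt).substitute(values)


rng = random.Random()
prompt = render(
    "a $time_of_day market in $country",
    {"time_of_day": time_of_day, "country": lambda: rng.choice(COUNTRIES)},
)
print(prompt)  # varies with the clock and the random choice
```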

We’re at the very beginning of a new, inexhaustible medium for artistic expression. I feel like someone in the 1840s writing up my little wishlist of features for next year’s model of the daguerreotype. What an incredible time to be alive.